Choosing a RAID Configuration For Your Home Server

Brian and I have been writing about our adventures with home servers for a long time. He’s been giving away DIY NAS builds for years, and he’s explained his ZFS disk configuration. I’ve been running a little virtual machine host at home, and I’ve explained my home server choices on my blog, too.

Why should I trust your opinions, Pat?

You shouldn’t. You should do plenty of research. Your data is valuable. In fact, I would wager that your data is more valuable than you even realize!

I haven’t worked a regular 9 to 5 job in quite a long time, but when I did, this was a big part of my job. Buying servers. Deciding how much disk we’d need to buy. Figuring out what sort of disk configuration would provide optimal or even just adequate performance for the task at hand.

You shouldn’t trust me just because I used to do this for a living! You should do your own research. I hope I can at least provide you with a good starting point.

- Pat’s NAS Building Tips and Rules of Thumb

- 40-Gigabit Infiniband: An Inexpensive Performance Boost For Your Home Network

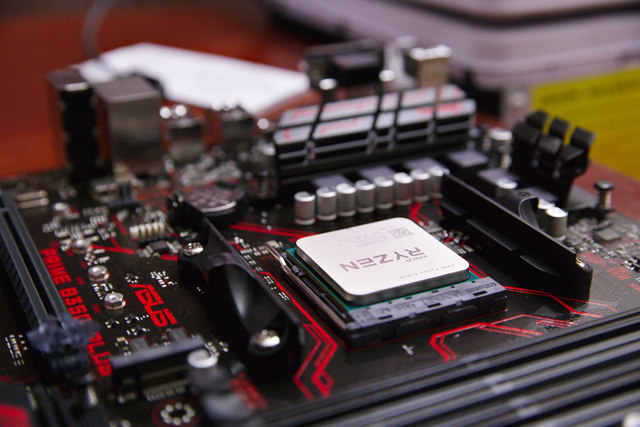

- Building a Low-Power, High-Performance Ryzen Homelab Server to Host Virtual Machines at patshead.com

- Can You Run A NAS In A Virtual Machine? at patshead.com

- DIY NAS: 2024 Edition and the 2024 EconoNAS! at briancmoses.com

- Upgrading My Home Network With MokerLink 2.5-Gigabit Switches

- I Bought The Cheapest 2.5-Gigabit USB Ethernet Adapters And They Are Awesome!

What are you doing with your home server?

I’m just going to take a guess. You’re storing video to stream to TVs around your house, or you’re into photography, and you’re storing lots of RAW photos.

You can skip the rest of this blog post. You should use RAID 6 or RAID-Z2. The easy thing to do is just use FreeNAS, set up RAID-Z2, and forget about it. These RAID levels have write performance issues, but they won’t get in your way.

In fact, most people with a NAS at home won’t be impacted by the slower write performance of RAID-Z2 or RAID 6. You’re already using large, inexpensive, slow disks–anything that isn’t an SSD is extremely slow now!

I'm slowly preparing to #3dprint my own #NAS case, hopefully with a bit of my own touches! I sat aside some time today to start printing/checking Toby K's calibration objects. Want to know more? Check it out at: https://t.co/NOPRpM5rEA pic.twitter.com/IkzLFGfo8M

— Brian Moses (@briancmoses) April 14, 2019

Your bottleneck is going to be your network, and that network is probably Gigabit Ethernet. As long as you’re mostly working with large files, like videos, your slow disks and choice of RAID configuration aren’t slowing things down. I’ll explain more about that later, if you’re interested in those details.

Blu-Ray tops out at 40 megabits per second. Blu-Ray for UHD will max out at 128 megabits per second. Those are only megabits. A single hard drive can sustain speeds over 150 megabytes per second, and a RAID-Z2 or RAID 6 will easily have read speeds several times higher.

You can’t just divide the speed of your Gigabit Ethernet interface by 40 or 128 megabits and decide that your server can handle streaming 8 UHD Blu-Ray streams or 25 HD Blu-Ray streams. There’s overhead. There will be contention for disk as your server needs to seek as it jumps from one video to another.
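Here is a rough sketch of that naive division, plus a more cautious version. The bitrates come from the numbers above. The `usable_percent` parameter and its value of 70 are my own illustrative assumption, not a measurement of real overhead. Counting whole streams gives 7 UHD streams, because the 8 above is 7.8 rounded up.

```python
# Naive upper bound on simultaneous video streams over one network link.
GIGABIT_ETHERNET_MBPS = 1000  # link speed in megabits per second

BITRATES_MBPS = {
    "HD Blu-Ray": 40,
    "UHD Blu-Ray": 128,
}


def max_streams(link_mbps, stream_mbps, usable_percent=100):
    """Return how many whole streams fit in the usable share of the link."""
    usable_mbps = link_mbps * usable_percent // 100
    return usable_mbps // stream_mbps


for name, bitrate in BITRATES_MBPS.items():
    naive = max_streams(GIGABIT_ETHERNET_MBPS, bitrate)
    # usable_percent=70 is a made-up allowance for overhead and disk contention.
    cautious = max_streams(GIGABIT_ETHERNET_MBPS, bitrate, usable_percent=70)
    print(f"{name}: naive {naive} streams, assuming 70% usable {cautious} streams")
```

Even the cautious number is far more streams than most houses have TVs.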

The important point to remember is that your RAID configuration isn’t likely to be the limiting factor in how much UHD content you can stream around the house!

For what it is worth, UHD streams from Amazon and Netflix are currently in the 20 megabit per second range.

- Pat’s NAS Building Tips and Rules of Thumb

- Building a Low-Power, High-Performance Ryzen Homelab Server to Host Virtual Machines at patshead.com

- Can You Run A NAS In A Virtual Machine? at patshead.com

- DIY NAS: 2019 Edition at briancmoses.com

But I’m not just serving video to a dozen TVs, and I heard RAID 6 is slow!

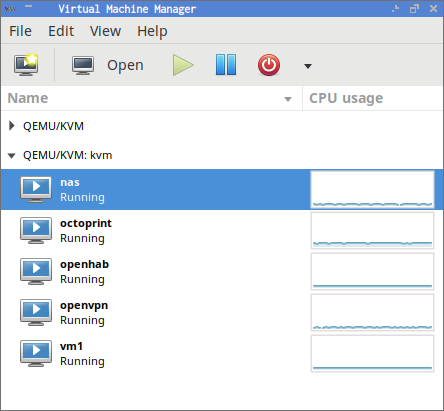

Maybe you’re not just running a simple NAS server. Maybe you have a virtual machine server at home, like my KVM server. Your server is running a handful of virtual machines. One VM is running Octoprint for your 3D printer. Another is running Plex, and it has to transcode video. You could be doing anything!

Odds are good that you can still get away with a simple, slow RAID 6 or RAID-Z2 configuration. I’m using something a little different, but not for performance reasons. None of my VMs generate much disk activity. I just wanted to buy fewer disks to save a few bucks.

- Can You Run A NAS In A Virtual Machine? at patshead.com

I just want to spend even more money! What can I do to get even better performance?!

The first thing you can spend money on is an SSD cache. I’m using a mirrored pair of Samsung 850 EVO SSDs in my lvm-cache setup. You can do something similar with FreeNAS and ZFS, though I’m not as well versed on how SSD caching on ZFS works.

Let’s use RAID 6 as an example. In the worst case scenario, RAID 6 has terrible write performance. Let’s say you need to modify a single 4K sector. If nothing is cached in RAM, your RAID system needs to read a chunk from every disk, modify that 4K sector in RAM, recompute the parity for that stripe, then write that data back out to every single disk. That is a read and a write to every disk in the array, and a full stripe across all your disks can add up to several megabytes.

It has been about four days, and promotions to the lvm-cache have slowed, but my write hit rate has gone back up over 50%. I need to get out for a long day of flying to see if this actually improves my video editing experience as much as I remember that it should! pic.twitter.com/jj2LKDpurS

— Pat Regan (@patsheadcom) May 17, 2019

This can be a nightmare for performance when you have lots of small random writes, like you might experience on a busy database server. It only becomes a nightmare when you run out of cache. If those chunks are already cached in RAM, they don’t need to be read from each disk before the write can happen. The parity can simply be recomputed, and the RAID system will just need to perform the write operations.
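To make the recompute step a little more concrete, here is a minimal sketch of the checksum (parity) calculation using plain XOR. This is a simplification: XOR is how single parity works, and real RAID 6 keeps a second, more complicated parity block as well. The tiny 4-byte chunks and the `disk_ops` helper are hypothetical and only mirror the worst case described above.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def disk_ops(disks, cached):
    """Reads and writes for one small write, per the simplified worst case."""
    reads = 0 if cached else disks  # cached stripes skip the read pass
    return reads, disks


# Three data chunks in one stripe (tiny chunks to keep this readable).
stripe = [b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xaa\xbb\xcc\xdd"]
parity = xor_blocks(stripe)

# Modify one chunk, then recompute parity over the whole stripe.
stripe[1] = b"\xff\xff\xff\xff"
new_parity = xor_blocks(stripe)

# Parity lets us rebuild any single missing chunk from the survivors.
rebuilt = xor_blocks([stripe[0], stripe[2], new_parity])
print(rebuilt == stripe[1])
print(disk_ops(8, cached=False), disk_ops(8, cached=True))
```

The point of the second function is just that a warm cache removes the entire read pass, which is why small random writes only hurt once the cache runs out.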

You probably have enough RAM to cache a lot of writes, especially if you’re following FreeNAS’s recommendation of 1 GB of RAM per TB of storage. Everything will seem fine until you write enough data all at once that your cache fills up. Then your write operation will slow down to the speed of your disks, and you might start to notice. ZFS is a copy-on-write filesystem, so it sidesteps this problem to an extent, but it will eventually catch up with you.

At this point, you can buy more RAM, or you can set up an SSD cache. I chose to use an SSD cache in my server. Flash disk is cheaper than RAM. My own lvm-cache is around 200 GB. This means that I can push about 200 GB of data to my NAS before my speeds will be impacted by the speed of my slower mechanical disks.

What I’m about to say isn’t exactly the truth, but it is a reasonable rule of thumb most of the time. RAID 6 read performance is comparable to RAID 0. RAID 6 write performance is usually on par with the speed of a single disk.

- Using dm-cache / lvmcache On My Homelab Virtual Machine Host To Improve Disk Performance at patshead.com

Are you saying I need to buy SSDs to use as a cache?!

No. You probably don’t need an SSD cache. Especially if your bottleneck tends to be your Gigabit Ethernet network.

I have one use case where I can feel my SSD cache doing its job, and I’m not even convinced that the small boost in performance was worth the cost of the SSDs. You can buy quite a bit more mechanical storage with the cash you save by not using cache drives. Skipping the SSD cache would have made for a much less interesting blog post!

In fact, I would recommend that you spend the money you saved by skipping the SSD cache on your backup solution.

- Using dm-cache / lvmcache On My Homelab Virtual Machine Host To Improve Disk Performance at patshead.com

RAID is not a backup

I say this a lot, because it is important. RAID is not a backup. RAID is used to prevent or reduce downtime.

When a disk fails in the middle of the night, my servers just keep chugging along. If you have hot swap bays, you can replace the dead drive without any interruption of service. This is important when your job requires you to provide five nines of uptime.

Even if you have to shut the server down in order to open it up to replace a drive, you’re still greatly reducing downtime. Without the RAID, you’d be restoring from backups, and that can take a long, long time.

There are all sorts of things that can and do happen that a RAID won’t save you from. A SATA or SAS controller or drive can fail and corrupt the data on all your disks. A new version of Premiere or Photoshop may wind up corrupting some of your important photos. You could get hit with some sort of ransomware.

More likely, you’ll be stupid and accidentally delete something important. We’ve all done something like that.

I back up all my data using Dropbox-style sync software called Seafile. I have 90 days of file history on the server. My Seafile service is hosted in Europe, so my data is definitely off-site. This also gives me local copies of all my data on both my desktop and laptop. Every change is pushed to the server within a minute or so, and those changes are pulled back to my other computers just as quickly. I’ve written about my backup setup on my blog.

Brian uses a different backup strategy. He stores all his important data on his DIY NAS server. His FreeNAS server takes periodic ZFS snapshots, and he has regularly scheduled jobs that back up his data to Backblaze.

- My Backup Strategy for 2013 - Real Time Off-Site Backups at patshead.com

- Backing up my FreeNAS to Backblaze B2 at briancmoses.com

What if RAID 6 or RAID-Z2 with SSD caching isn’t fast enough?

This is when you can think about moving up to RAID 10. As far as performance goes, here’s roughly what you can expect out of RAID 6 and RAID 10.

- Both RAID 10 and RAID 6 will give you much faster sequential and random read speeds compared to a single disk

- RAID 10 will improve your sequential and random write speeds

- RAID 6 will improve your sequential read speeds

- RAID 6 write performance is complicated

If your use case requires good random access performance, you either need to use SSD caching and hope it fits your workload, or you need to spend a lot of money and just use SSDs. Random reads and writes to SSDs are up to two orders of magnitude faster than mechanical disks. There’s just no comparison.

If you’re in a position where your homelab virtual machine server or home NAS server isn’t fast enough with RAID 6 or RAID-Z2, you are most definitely in the extreme minority. Stop by the Butter, What?! Discord server and talk to me about what you’re doing. It is probably quite interesting!

That said, correcting the write performance problem of RAID 6 isn’t all that expensive, unless your storage needs are quite large. RAID 10 becomes less economical as you add disks.

RAID 6 dedicates two disks’ worth of storage to redundancy. RAID 10 dedicates half your disks to redundancy.

If you only have four disks, RAID 6 and RAID 10 provide equal amounts of storage. As you add disks, your cost per TB of storage goes up quickly with RAID 10. As long as you’re only using 8 or 10 disks, though, the extra cost won’t be ridiculous.
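A quick sketch of that capacity math, assuming every disk is the same size. The 4 TB disk size and the `usable_tb` helper are illustrative choices of mine.

```python
DISK_TB = 4  # illustrative size of each disk, in TB


def usable_tb(disks, level, disk_tb=DISK_TB):
    """Usable capacity in TB for a simple RAID 6 or RAID 10 layout."""
    if level == "raid6":
        return (disks - 2) * disk_tb  # two disks' worth of redundancy
    if level == "raid10":
        return disks * disk_tb / 2    # half of all disks go to redundancy
    raise ValueError(f"unknown RAID level: {level}")


for disks in (4, 6, 8, 10):
    r6 = usable_tb(disks, "raid6")
    r10 = usable_tb(disks, "raid10")
    print(f"{disks} disks: RAID 6 {r6} TB usable, RAID 10 {r10} TB usable")
```

At four disks the two layouts tie, and every disk you add after that widens the gap in RAID 6's favor.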

Is it OK if I tell a lie about RAID 10 performance?

I already lied to you about the performance of a RAID 6 array earlier. I’m about to give you a rule of thumb about RAID 10 performance that isn’t exactly the truth.

With the right implementation, RAID 10 read performance can be just about as fast as RAID 0. Write performance will be about half as fast as RAID 0.
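If you want to plug numbers into these rules of thumb, here is a small sketch. It treats RAID 0 speed as the number of disks times the speed of one disk, which is my own simplifying assumption, and it uses the 150 megabytes per second figure from earlier as the single-disk speed. Like the rules themselves, this isn't exactly the truth.

```python
def rule_of_thumb_mb_s(disks, single_disk_mb_s, level):
    """Very rough sequential throughput estimates, in megabytes per second."""
    raid0 = disks * single_disk_mb_s  # assumed: RAID 0 scales with disk count
    if level == "raid6":
        # Reads comparable to RAID 0, writes on par with a single disk.
        return {"read": raid0, "write": single_disk_mb_s}
    if level == "raid10":
        # Reads about as fast as RAID 0, writes about half of RAID 0.
        return {"read": raid0, "write": raid0 / 2}
    raise ValueError(f"unknown RAID level: {level}")


for level in ("raid6", "raid10"):
    print(level, rule_of_thumb_mb_s(8, 150, level))
```

Even the pessimistic RAID 6 write estimate is above what Gigabit Ethernet can deliver, which is why most home servers never notice.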

- Building a Low-Power, High-Performance Ryzen Homelab Server to Host Virtual Machines at patshead.com

- DIY NAS: 2019 Edition at briancmoses.com

What if Gigabit Ethernet isn’t fast enough?

Gigabit Ethernet used to be pretty quick. For the most part, it still is. Like I said earlier, there’s room to stream dozens of HD videos down a single run of CAT-5E. A network that is nearly as fast as a hard drive sounds alright, doesn’t it?

Sure, you can access a computer on the other side of your house just as quickly as you can access a hard drive on the computer you’re sitting in front of. Unfortunately, hard drives just aren’t fast anymore. SSDs can sustain a ridiculous number of I/O operations per second (IOPS), and a good NVMe drive can almost compete with the speed of your RAM!

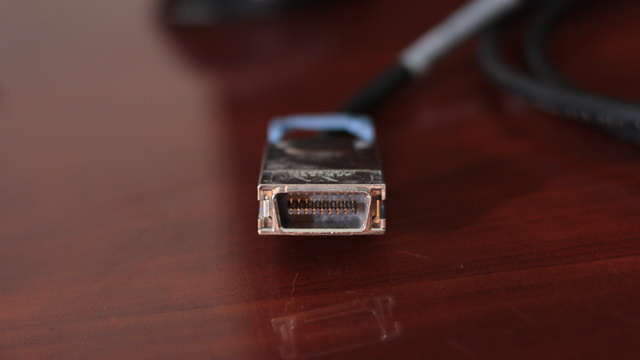

I wanted my NAS virtual machine to feel as fast as accessing a disk that’s installed in my desktop computer. They live in the same room, so I bought some old Infiniband hardware. The CX4 cables I have to use can only stretch a few meters, but that’s OK when the two machines sit this close together.

Old server hardware is cheap. I spent quite a bit less than $100 on a pair of dual-port 20 gigabit Infiniband cards and a CX4 cable. These cards let my computers communicate at the speed of a 4x PCIe 2.0 slot. With overhead, that means I can move data around at about 8 gigabits per second.

I’ve measured Samba file transfer speeds of around 700 megabytes per second. There are faster NVMe drives, but this is faster than the SATA SSD installed in my desktop. Newer Infiniband hardware keeps dropping in price. You could probably double my speeds today without spending any more money than I did!

Configuring Infiniband is a bit more complicated than Ethernet, though it has advantages if you’re using something like iSCSI. For a simpler setup that’s roughly as fast, you should check out Brian’s 10-Gigabit Ethernet setup. He bought old 10-Gigabit Ethernet cards that use direct connect SFP+ cables. He spent about $120 to connect three computers in a star topology.

If you need to get across the house, though, you’re probably better off buying modern 10-Gigabit Ethernet hardware that runs on CAT-6. It costs more, but it isn’t ridiculously expensive!

- Building a Cost-Conscious, Faster-Than-Gigabit Network at briancmoses.com

- Maturing my Inexpensive 10Gb network with the QNAP QSW-308S at Brian’s Blog

- 40-Gigabit Infiniband: An Inexpensive Performance Boost For Your Home Network

If I can lose half the disks in my RAID 10 without losing data, why would I use RAID 6?

It sounds promising, doesn’t it? If you have 8 disks, 3 disks have to die for your RAID 6 to fail, but you can lose up to 4 disks in your RAID 10 before experiencing a failure.

That’s what most people think, but they’re not noticing an important detail. Your RAID 6 or RAID-Z2 array can lose any two disks without compromising your data. Your RAID 10 array is effectively built out of mirrored pairs. You can think of RAID 10 as a RAID 0 array built on top of a bunch of little RAID 1 arrays.

Sure, it is possible to lose 4 disks in this hypothetical 8 disk RAID 10 array and still have a working array. They have to be the right 4 disks, though. If you lose two disks that are part of the same mirror, your whole RAID 10 is toast.
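You can count the lucky and unlucky combinations yourself. This sketch enumerates every possible set of failed disks in a hypothetical 8 disk array and checks which sets each layout survives. It assumes the RAID 10 mirrors are disks (0, 1), (2, 3), and so on, and it treats every combination as equally likely, which real disk failures are not.

```python
from itertools import combinations


def raid10_survives(failed, disks=8):
    """RAID 10 survives unless both halves of some mirrored pair are gone."""
    return all(not {d, d + 1} <= failed for d in range(0, disks, 2))


def raid6_survives(failed):
    """RAID 6 survives the loss of any two disks, and nothing more."""
    return len(failed) <= 2


def survival_odds(disks, failures, survives):
    """Return (surviving combinations, total combinations)."""
    cases = [set(c) for c in combinations(range(disks), failures)]
    return sum(1 for failed in cases if survives(failed)), len(cases)


for failures in range(1, 6):
    r10 = survival_odds(8, failures, raid10_survives)
    r6 = survival_odds(8, failures, raid6_survives)
    print(f"{failures} failed: RAID 10 survives {r10[0]}/{r10[1]}, "
          f"RAID 6 survives {r6[0]}/{r6[1]}")
```

With two dead disks, RAID 10 loses the array in 4 of the 28 possible combinations, while RAID 6 survives all 28. RAID 10 only pulls ahead once three or more disks have failed, and even then it needs luck.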

FreeNAS and ZFS vs. Linux and MD RAID

ZFS was designed by Sun Microsystems to be used with big, expensive servers. Most of us don’t use big, expensive servers at home. We can’t afford to spend tens of thousands of dollars, and they’re really loud!

Sun expected you to fill your server up with expensive SAS disks. If you buy a chassis with 16 or 32 drive bays, you fill them up with drives, even if they cost $1,000 to $2,000 each. That’s what I did when I did this for a living. It wasn’t my money that I was spending!

You fill your server with drives, because many hardware RAID controllers don’t allow you to add disks to your RAID after it is already configured, up, and running. ZFS doesn’t allow this, either. If you’re running FreeNAS and RAID-Z2, it is often more economical to buy as many drives as you can when you build your server.

Linux’s MD RAID is capable of adding additional disks to an existing array. If you have four disks in your RAID 6 or RAID 10 array, you can add a fifth and grow your space.

You can add disks to your FreeNAS server with ZFS, but you can’t do it one disk at a time. You have to add an entire additional RAID-Z2 vdev to your storage pool. Your new RAID-Z2 vdev will dedicate two more disks to redundancy, so you’ll have four redundant disks across your pair of vdevs. This is wasteful!

- Adding Another Disk to the RAID 10 on My KVM Server at patshead.com

You should still use FreeNAS and RAID-Z2

Brian likes FreeNAS, because he likes the user-friendly web interface. We both like ZFS, because it is a solid filesystem, and its block level checksums are awesome. I don’t believe there is a safer place to store your data than on a properly configured server running ZFS.

For me, the web interface just gets in my way. I know how to configure all this stuff at the command line, and it is easy for me to set things up exactly the way I want them. I bet there is a FreeNAS-like Linux distribution out there, but I hate recommending anything I haven’t tried myself, so I’m not even going to try.

If setting up Linux’s RAID and LVM layers via the command line looks like a daunting task to you, then I would definitely point you towards giving FreeNAS and RAID-Z2 a shot.

- Building a Low-Power, High-Performance Ryzen Homelab Server to Host Virtual Machines at patshead.com

- DIY NAS: 2019 Edition at briancmoses.com

Why are you using RAID 10 on Linux, Pat?!

I’m not a fan of BSD. We’ll skip that, though, because my problems with BSD and FreeBSD are well outside the scope of this article!

My storage needs used to be quite modest. My most sizeable collection of important data was the RAW photos from my DSLR. I was only collecting about 120 GB of new photos every year. A couple of terabytes would last me quite a few years.

I decided it would be best to use a pair of 4 TB disks in a RAID 10 array. Using RAID 6 would have been fine, but then I would need to start with at least four disks, and I didn’t want to do that. Two disks is a lot cheaper than four!

Then I started flying FPV freestyle quadcopters. Now I collect more than a terabyte of GoPro footage every year. I’ve added a third disk to my RAID 10 array, and I’ll need to add a fourth sometime next year. This was unexpected!

- Building a Low-Power, High-Performance Ryzen Homelab Server to Host Virtual Machines at patshead.com

- Can You Run A NAS In A Virtual Machine? at patshead.com

- DIY NAS: 2019 Edition at briancmoses.com

Cost isn’t the only advantage to adding disks as you need them

I wrote about this at length in my blog post about adding a disk to expand my RAID 10 array. Sure, there is money to be saved if you can get away with only buying the disks that you need today, then adding more disks as you run out of space in the future. Hard drives get larger and cheaper as time goes by.

That’s not my favorite advantage, though. I currently have only three disks in my KVM server at home. The original two disks are now more than four years old. The third disk is a little more than six months old.

Old disks are scary disks. To me, five year old disks are absolutely terrifying. If you add storage one disk at a time as you need it, you’ll space out the age of your disks.

I’m going to have to add storage again in about 12 months. At that point, I’ll have two disks that are more than five years old, one disk that is nearly two years old, and a single brand new disk.

Disks are more likely to fail as they get older. Starting small and growing to meet your needs helps keep the average age of the disks in your array lower.

If I bought four disks when I built this server, every disk would be starting to look fragile by now. I’d be seriously considering just replacing every disk next year!
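Here is the same comparison as simple arithmetic, looking about 12 months ahead. The ages are rounded from the numbers above, and 1.5 years for the third disk is my approximation of "nearly two years old."

```python
from statistics import mean

# Approximate disk ages in years, about 12 months from now.
staggered = [5, 5, 1.5, 0]    # two originals, one added later, one brand new
all_at_once = [5, 5, 5, 5]    # four disks bought when the server was built

for name, ages in (("staggered", staggered), ("all at once", all_at_once)):
    old = sum(1 for age in ages if age >= 5)
    print(f"{name}: average age {mean(ages)} years, {old} disks at 5+ years")
```

Buying disks as I need them leaves half as many disks in the scary age bracket.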

Conclusion

I’m surprised that you’ve made it this far! I expect most people stopped reading when I told them to just stop thinking about it and use RAID-Z2 or RAID 6! It is all I should really need in my own server at home. I probably wouldn’t have even bothered to use lvm-cache at all, except that it makes for more interesting blog posts!

Is RAID-Z2 enough for your use case at home, or do you need more performance? Did you see much of a performance bump when using lvm-cache or other SSD caching layer? What’s your workload like? Tell us about it in the comments, or stop by the Butter, What?! Discord server to chat with me about it!

- Pat’s NAS Building Tips and Rules of Thumb

- Building a Low-Power, High-Performance Ryzen Homelab Server to Host Virtual Machines at patshead.com

- DIY NAS: EconoNAS 2019 at briancmoses.com

- DIY NAS: 2019 Edition at briancmoses.com

- Can You Run A NAS In A Virtual Machine? at patshead.com

- My Backup Strategy for 2013 - Real Time Off-Site Backups at patshead.com

- Backing up my FreeNAS to Backblaze B2 at briancmoses.com

- Building a Cost-Conscious, Faster-Than-Gigabit Network at briancmoses.com

- Maturing my Inexpensive 10Gb network with the QNAP QSW-308S at Brian’s Blog

- 40-Gigabit Infiniband: An Inexpensive Performance Boost For Your Home Network