Should I Upgrade to 40-Gigabit Infiniband?

I’m watching my friend Brian work hard today. Not in person. I’m watching him post photos of his progress on Instagram and Twitter. What is Brian working on?

He’s working on a sort of sideways upgrade. Four years ago, Brian and I both installed faster-than-gigabit network equipment in our home offices. My blog post about upgrading to Infiniband is about two months older than his 10GbE post, so I’m going to assume I was his inspiration.

To save money, Brian used decommissioned server-grade 10-gigabit Ethernet adapters. He had three machines to connect, so he made sure each machine had two ports. Then he plugged every machine directly into every other machine, and he had 10-gigabit speeds between his most important machines without buying a switch.

This was a huge cost savings, because even small 10-gigabit switches cost nearly $1,000 at the time. Today he’s adding a QNAP QSW-308S switch to his network. I don’t remember what he paid for it, but it was definitely a reasonable value. Four years makes a big difference!

- Maturing my Inexpensive 10Gb network with the QNAP QSW-308S at Brian’s Blog

- Infiniband: An Inexpensive Performance Boost For Your Home Network at patshead.com

- IP Over InfiniBand and KVM Virtual Machines at patshead.com

- 40-gigabit Infiniband cards on eBay

- 8-port 40-gigabit Infiniband switches on eBay

Do I need to beat Brian’s network speeds?

In theory, I already have. My desktop and my virtual machine host here in my office both have 20-gigabit Infiniband cards. 20 is more than 10, right?

It isn’t quite that simple. My Infiniband cards are 8x PCIe 1.0 cards, and at least one of the two cards is installed in a 4x slot. That cuts my bandwidth in half, and PCIe 1.0 speeds are rather slow, so my Infiniband network is limited by my PCIe bus speeds. Let’s just say that’s right around 8 gigabits per second.
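
Here is a minimal Python sketch of where that 8-gigabit figure comes from. It assumes the only overhead is PCIe’s 8b/10b line encoding; packet and protocol overhead are ignored, so real transfers land somewhat lower. The `pcie_data_gbps` helper is hypothetical, written just for this illustration.

```python
# Per-lane signaling rate (GT/s) and line-encoding efficiency for the PCIe
# generations discussed in this post. PCIe 1.0 and 2.0 both use 8b/10b
# encoding, so 8 of every 10 bits on the wire carry data.
PCIE_GENERATIONS = {
    "1.0": (2.5, 8 / 10),
    "2.0": (5.0, 8 / 10),
}


def pcie_data_gbps(generation: str, lanes: int) -> float:
    """Usable data rate in Gbit/s after line encoding only.

    Transaction-layer and protocol overhead are ignored, so this is a
    ceiling rather than a prediction of real throughput.
    """
    rate_gts, efficiency = PCIE_GENERATIONS[generation]
    return rate_gts * efficiency * lanes


if __name__ == "__main__":
    for lanes in (4, 8):
        print(f"PCIe 1.0 x{lanes}: {pcie_data_gbps('1.0', lanes):.1f} Gbit/s")
```

An x4 link gives the 8 Gbit/s ceiling; the same card in a full x8 slot would top out at 16 Gbit/s.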

This is pretty comparable to what Brian was seeing with his 10gbe cards. He’s going to be using more modern chipsets now. What if he can eke out another gigabit or two? Will that make my Infiniband network feel slow?!

- Building a Cost-Conscious, Faster-Than-Gigabit Network at Brian’s Blog

- Maturing my Inexpensive 10Gb network with the QNAP QSW-308S at Brian’s Blog

Doubling my speed would be quite cheap!

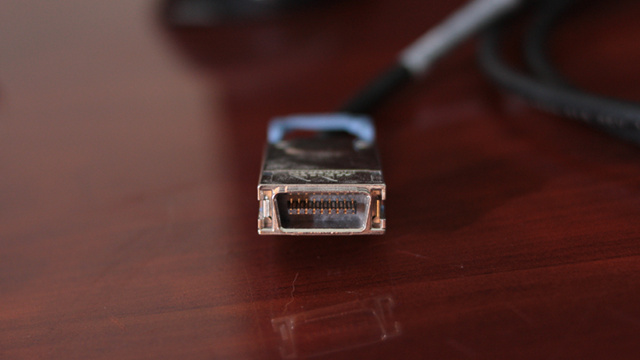

I checked eBay. Used 40-gigabit Infiniband cards cost between $20 and $30, and a short QSFP+ cable would add another $20 or so. These cards are all 8x PCIe 2.0, so they would run twice as fast as my 20-gigabit cards. That would put me at around 16 gigabits per second.

If I could manage to get both cards into 8x slots, that could double my speed again to 32 gigabits per second. That’s right around the limit of 40-gigabit Infiniband: the signaling rate is 40 gigabits per second, but 8b/10b encoding overhead means it only carries 32 gigabits per second of data.
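
The same arithmetic explains the 16- and 32-gigabit numbers. This sketch assumes PCIe 2.0 runs at 5 GT/s per lane with 8b/10b encoding, that SDR, DDR, and QDR Infiniband also use 8b/10b, and that whichever is slower, the Infiniband link or the PCIe slot, sets the ceiling. `effective_gbps` is a hypothetical helper, and protocol overhead is again ignored.

```python
# Encoding efficiency: PCIe 1.0/2.0 and SDR/DDR/QDR InfiniBand all use 8b/10b,
# so 8 of every 10 bits on the wire carry data.
ENCODING = 8 / 10

# 4x InfiniBand link signaling rates in Gbit/s.
IB_SIGNALING_GBPS = {"SDR": 10, "DDR": 20, "QDR": 40}

# PCIe 2.0 signaling rate per lane, in GT/s.
PCIE2_LANE_GTS = 5.0


def effective_gbps(ib_generation: str, pcie2_lanes: int) -> float:
    """Data-rate ceiling for an InfiniBand card in a PCIe 2.0 slot.

    The slower of the InfiniBand link and the PCIe slot wins. Protocol
    overhead is ignored, so real transfers will be lower.
    """
    link = IB_SIGNALING_GBPS[ib_generation] * ENCODING
    bus = PCIE2_LANE_GTS * ENCODING * pcie2_lanes
    return min(link, bus)


if __name__ == "__main__":
    for lanes in (4, 8):
        speed = effective_gbps("QDR", lanes)
        print(f"QDR card in PCIe 2.0 x{lanes}: {speed:.0f} Gbit/s")
```

In an x8 slot the bus and the link both top out at 32 Gbit/s, which is why that number is the practical ceiling for 40-gigabit Infiniband.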

If you’re following in my Infiniband footsteps, you should definitely move up to 40-gigabit Infiniband cards. They cost about as much as my 20-gigabit cards cost me four years ago. There’s not much reason to skip this upgrade!

What would double or quadruple speed buy me?

This is the problem. The only place where I would notice an increase in speed is when moving files to or from my NAS virtual machine. How much faster can my NAS get?

My virtual machine host has four 7200 RPM disks in a RAID 10. I am using a pair of 240 GB Samsung 850 EVO SSDs in a mirror as an LVM cache layer. At best, the spinning platters can push 300 to 400 megabytes per second when reading sequentially.

The SSDs are each good for around 350 megabytes per second. That’s roughly true for both sequential and random reads and writes.

Sometimes everything lines up right. The SSDs do some of the caching while the spinning disks are also being written to at full speed. If I were lucky, that might max out at something in the ballpark of 1.4 gigabytes per second. It probably wouldn’t hold that speed for long, if at all, but I’d bet that’s the upper limit.

I can’t seem to find a CIFS test or benchmark on my blog, but a tweet of mine from 2017 agrees with my memory. A single thread hitting my NAS tends to max out somewhere around 300 megabytes per second, and more threads bring that up to more than 700 megabytes per second.

That 700-megabyte-per-second number is approaching the theoretical maximum speed of my PCIe bus. Both machines have PCIe 3.0 slots, but the cards are only PCIe 1.0, and I only get to use half the available lanes on these cards.

```
wonko@zaphod:/mnt/platter/isos$ dd bs=4M if=CentOS-7-x86_64-DVD-1503-01.iso of=/dev/null
1027+1 records in
1027+1 records out
4310695936 bytes (4.3 GB, 4.0 GiB) copied, 5.97425 s, 722 MB/s
```

NOTE: I seem to get 327 megabytes per second on the first read and 6 gigabytes per second on the second read, which comes from the local cache. I get 720 megabytes per second when I drop the local caches on the receiving end while the file is still primed in the NAS VM’s cache. My rarely accessed ISO images are unlikely to be on the SSD cache.
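
The 722 MB/s figure in the dd output is just bytes divided by seconds, in decimal megabytes. This quick Python check compares it against the 8 Gbit/s PCIe 1.0 x4 ceiling, assuming (as before) that 8b/10b encoding is the only overhead, which flatters the bus a little.

```python
# Figures copied from the dd output above.
BYTES_COPIED = 4_310_695_936
SECONDS = 5.97425

# dd reports decimal units, so 1 MB = 10**6 bytes.
mb_per_s = BYTES_COPIED / SECONDS / 10**6
gbit_per_s = mb_per_s * 8 / 1000

# PCIe 1.0 x4 data ceiling, assuming only 8b/10b encoding overhead:
# 4 lanes * 2.5 GT/s * 0.8 = 8 Gbit/s, which is 1000 MB/s.
PCIE1_X4_MB_PER_S = 4 * 2.5 * (8 / 10) * 1000 / 8

if __name__ == "__main__":
    print(f"{mb_per_s:.1f} MB/s ({gbit_per_s:.2f} Gbit/s)")
    print(f"{mb_per_s / PCIE1_X4_MB_PER_S:.0%} of the PCIe 1.0 x4 ceiling")
```

That works out to a bit over 70 percent of the theoretical bus ceiling before any protocol overhead is counted.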

The 300-megabyte-per-second number is the important one. That’s just where a single-threaded read over NFS or CIFS tends to max out for me. That is my most common use case, too.

I could spend $80. I could spend the time pulling these machines out from under my desk and installing new Infiniband cards. I just might double my maximum throughput to well over one full gigabyte per second, and that would be cool.

I’d still be looking at 300 megabytes per second when dumping video from my GoPros or editing videos in DaVinci Resolve.

Ugh!

I don’t want to take apart my machines

I hate opening up my machines. They’re both tucked into a cart-type thing next to my desk, and my 3D printer lives on top of it. Many of the cables aren’t long enough to let me pull the computers far enough forward to unplug things, so I have to contort myself and get behind there. I hate doing that.

I need a good reason to do this work. I’m still expecting that 320-megabyte-per-second number to be the same no matter how fast my network gets. That’s just the combined average read speed of two of my RAID disks. The network isn’t my bottleneck. The disks are!

This is true for most home servers, and when it isn’t, it’s usually close. My single-threaded read speed straight from the disks is only about three times the speed of gigabit Ethernet.
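
A transfer can only move as fast as its slowest stage, which is the whole argument of this section. Here is a rough Python sketch of that bottleneck model. The 320 MB/s disk figure comes from this post; the network numbers are assumptions: encoding-only ceilings for the Infiniband options and a typical real-world ~110 MB/s for gigabit Ethernet.

```python
# Single-threaded read rate from the RAID 10 disks, in MB/s (from this post).
DISK_MB_S = 320

# Network ceilings in MB/s. These are assumptions: the Infiniband entries are
# encoding-only limits (Gbit/s * 1000 / 8), and gigabit Ethernet uses a
# typical real-world ~110 MB/s rather than its 125 MB/s line rate.
NETWORK_MB_S = {
    "gigabit Ethernet": 110,
    "20-gigabit IB, PCIe 1.0 x4 (8 Gbit/s)": 1000,
    "40-gigabit IB, PCIe 2.0 x4 (16 Gbit/s)": 2000,
    "40-gigabit IB, PCIe 2.0 x8 (32 Gbit/s)": 4000,
}


def effective_mb_s(disk_mb_s: float, network_mb_s: float) -> float:
    """A transfer can't run faster than its slowest stage."""
    return min(disk_mb_s, network_mb_s)


if __name__ == "__main__":
    for name, net in NETWORK_MB_S.items():
        speed = effective_mb_s(DISK_MB_S, net)
        print(f"{name:40} -> {speed:5.0f} MB/s")
    ratio = DISK_MB_S / NETWORK_MB_S["gigabit Ethernet"]
    print(f"disk vs. gigabit Ethernet: {ratio:.1f}x")
```

Every Infiniband row comes out at the same 320 MB/s; only the gigabit Ethernet row is limited by the network.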

Conclusion

The important takeaway here is that you shouldn’t buy used 20-gigabit DDR Infiniband cards any longer. You should be buying 40-gigabit QDR Infiniband cards, because they’re twice as fast and don’t cost any more than the slower cards!

I’m thinking I need to put this upgrade on my list of projects. I think it is worth $80 and a couple of hours farting around under my desk just to update the old Infiniband post.

What do you think? Should I work on ordering parts for this upgrade? Let me know in the comments, or stop by the Butter, What?! Discord server to chat with me about it!

- Infiniband: An Inexpensive Performance Boost For Your Home Network at patshead.com

- IP Over InfiniBand and KVM Virtual Machines at patshead.com

- Building a Cost-Conscious, Faster-Than-Gigabit Network at Brian’s Blog

- Maturing my Inexpensive 10Gb network with the QNAP QSW-308S at Brian’s Blog

- 40-gigabit Infiniband cards on eBay

- 8-port 40-gigabit Infiniband switches on eBay