I Ordered 40-Gigabit Infiniband Cards

UPDATE: I goofed. The HP cards are FlexibleLOM cards. Not PCIe. I’m assuming that I looked at so many cards that I just wound up not paying close enough attention to the photos of the ones I actually chose. This is a bummer, because the 56-gigabit FlexibleLOM cards were $13 each. Comparable 56-gigabit PCIe cards are $70 or more! I wound up ordering 40-gigabit Infiniband cards for about $30 each. They won’t do 40-gigabit Ethernet, but they are the same line of PCIe 3.0 ConnectX-3 cards as the $13 HP cards, so in my own use case, I won’t notice the difference.

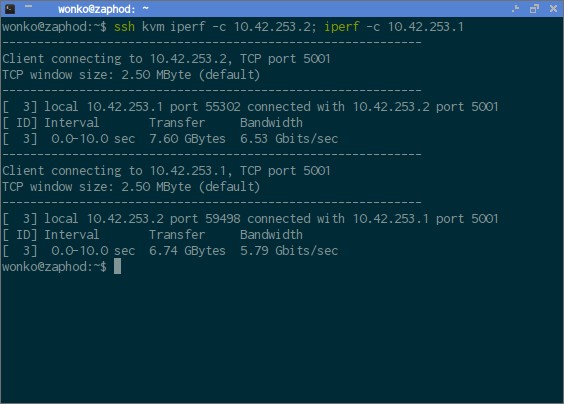

UPDATE: I ordered the correct 40-gigabit Infiniband cards. They were delivered. They’ve been installed. I’ve tested them. I have indeed doubled my network performance!

I’m too excited to wait until they arrive to start writing words about this. I’ve been running with 20-gigabit Infiniband cards in my desktop and virtual machine server for nearly five years. That setup only cost me $55, and I wound up seeing CIFS, Samba, and NFS speeds of up to a little over 700 megabytes per second. That’s right around 6 gigabits per second.
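If you want to check that conversion yourself, here is a minimal Python sketch. It assumes decimal units (1 megabyte = 1,000,000 bytes), and the function name is just something made up for this example.

```python
def megabytes_to_gigabits(mb_per_s: float) -> float:
    """Convert decimal megabytes per second to gigabits per second."""
    return mb_per_s * 8 / 1000  # 8 bits per byte, 1000 megabits per gigabit


print(megabytes_to_gigabits(700))  # 5.6
```

This only counts file data. Whatever is on the wire will be a little higher once protocol overhead is included.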

I’m not using my Infiniband cards to their fullest. I’m running IP-over-Infiniband. This sort of brings your Infiniband network stack down into a single thread. These older Mellanox MT25408 adapters are 8x PCIe 2.0 cards. The card in the server is in a 16x PCIe 2.0 slot, and the card in the desktop is in a 4x PCIe 3.0 slot. That means the slower end, the 4x slot in my desktop, limits me to 4x PCIe 2.0 speeds.
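The slot reasoning above can be sketched in a few lines of Python. This is a simplified model, not anything the driver actually runs: it assumes a PCIe link settles on the lower generation and the narrower lane count of the card and the slot. The `PcieLink` type and `negotiated` function are hypothetical names for illustration.

```python
from typing import NamedTuple


class PcieLink(NamedTuple):
    gen: int    # PCIe generation (1, 2, or 3)
    lanes: int  # lane count (4, 8, or 16)


def negotiated(card: PcieLink, slot: PcieLink) -> PcieLink:
    """Simplified model: the link runs at the lower generation and narrower width."""
    return PcieLink(min(card.gen, slot.gen), min(card.lanes, slot.lanes))


old_card = PcieLink(gen=2, lanes=8)  # MT25408, as described in the post

server = negotiated(old_card, PcieLink(gen=2, lanes=16))
desktop = negotiated(old_card, PcieLink(gen=3, lanes=4))

print(server)   # PcieLink(gen=2, lanes=8)
print(desktop)  # PcieLink(gen=2, lanes=4)
```

Traffic between the two machines can only go as fast as the slower of those two links, which is the 4x PCIe 2.0 link in the desktop.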

I remember doing the math five years ago and guessing that this is why I top out around 7 gigabits per second, but I suspect I got something wrong when I did that math.

- 40-Gigabit Infiniband: An Inexpensive Performance Boost For Your Home Network

- InfiniBand: An Inexpensive Performance Boost For Your Home Network

- IP Over InfiniBand and KVM Virtual Machines

- Building a Cost-Conscious, Faster-Than-Gigabit Network

Infiniband costs a lot less now!

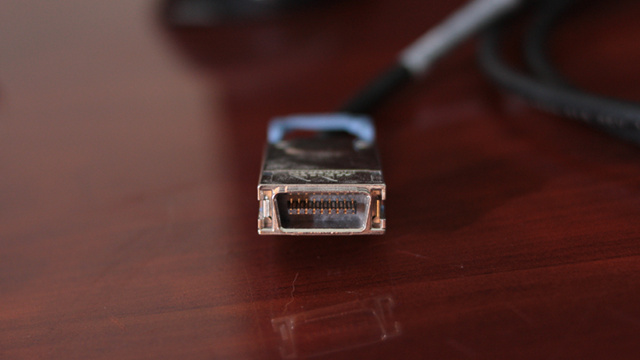

I ordered a pair of dual-port HP 764737-001 Infiniband cards for $15. I believe these are Mellanox ConnectX-3 Pro cards.

A single Mellanox QSFP+ cable was $18.95. Shipping was free. Taxes brought my total price up to $36.75. That’s about $20 less than I paid for my 20-gigabit Infiniband setup five years ago. The new cards will be here Tuesday.

Used 40-gigabit FDR switches aren’t as inexpensive as 20-gigabit DDR Infiniband switches were five years ago. I remember seeing several 8-port DDR switches on eBay for around $100. I’m not finding much in the way of FDR switches for under $200, but these tend to be 36-port switches. I don’t know what I’d ever manage to do with 36 ports at home, but at least you’re getting something for the extra money.

I don’t think I mentioned this yet. This Infiniband gear is all used. 40-gigabit Infiniband FDR was the new hotness back in 2011. Folks that were using this used gear are probably upgrading to 200-gigabit HDR Infiniband gear now.

- HP 40/56-Gigabit FlexibleLOM Infiniband Cards at eBay (*NOT PCIe!!!)

- Mellanox QSFP+ Cables at eBay

Are these cards 40-gigabit or 56-gigabit?!

I keep saying these are 40-gigabit cards. They’re actually 56-gigabit cards.

I could go back and correct myself, but this gives me an opportunity to explain why this doesn’t matter to me.

I’m assuming that my current setup is limited to around 7 gigabits per second due to the slots the cards are in. I’m guessing I’d be hitting 14 gigabits per second or so if I could get both cards into 8x PCIe slots. There’s no easy or cheap way for me to do this.

[ 12.441709] mlx4_core 0000:06:00.0: 8.000 Gb/s available PCIe bandwidth, limited by 2.5 GT/s PCIe x4 link at 0000:02:04.0 (capable of 16.000 Gb/s with 2.5 GT/s PCIe x8 link)

[ 12.575456] <mlx4_ib> mlx4_ib_add: mlx4_ib: Mellanox ConnectX InfiniBand driver v4.0-0

[ 12.576175] <mlx4_ib> mlx4_ib_add: counter index -1 for port 1 allocated 0

[ 12.576243] <mlx4_ib> mlx4_ib_add: counter index -1 for port 2 allocated 0

NOTE: I’m pretty sure my 20-gigabit cards are PCIe 2.0, but the kernel claims they’re operating at PCIe 1.0 speeds. I might see a really big bump in speed next week! The original blog post dmesg output says PCIe 2.0 and 2.5 GT/s on the same line. 2.5 GT/s is PCIe 1.0 speed. I wonder if this generated some confusion in my brain at the time when I was doing my math?!
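Here is a small Python sketch that pulls the numbers out of that `mlx4_core` line and rechecks them. The regular expression is written against the one log line quoted above, so treat it as an assumption that other kernels or drivers format the message the same way. The 8b/10b encoding factor applies to PCIe 1.0 and 2.0 links.

```python
import re

LINE = (
    "[ 12.441709] mlx4_core 0000:06:00.0: 8.000 Gb/s available PCIe bandwidth, "
    "limited by 2.5 GT/s PCIe x4 link at 0000:02:04.0 "
    "(capable of 16.000 Gb/s with 2.5 GT/s PCIe x8 link)"
)

PATTERN = re.compile(
    r"(?P<avail>[\d.]+) Gb/s available PCIe bandwidth, "
    r"limited by (?P<rate>[\d.]+) GT/s PCIe x(?P<lanes>\d+) link"
)

# Per-lane transfer rate in GT/s mapped to PCIe generation
GEN_BY_RATE = {2.5: 1, 5.0: 2, 8.0: 3}

match = PATTERN.search(LINE)
avail = float(match["avail"])
rate = float(match["rate"])
lanes = int(match["lanes"])
gen = GEN_BY_RATE[rate]

# PCIe 1.0 and 2.0 use 8b/10b encoding: 8 data bits for every 10 bits sent
expected = rate * lanes * 8 / 10

print(f"PCIe {gen}.0 x{lanes}: kernel says {avail} Gb/s, math says {expected} Gb/s")
```

For the line above, both numbers come out to 8.0 Gb/s, which lines up with a PCIe 1.0 link running at 4x.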

The new HP cards are 8x PCIe 3.0 cards. The card in my server will reach 8x PCIe 2.0 speeds, while the card in my desktop will reach 4x PCIe 3.0 speeds. That’s twice as fast as what I have available today, and if my five-year-old math was correct, I’ll be doubling my throughput when I install the new gear.
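To put rough numbers on "twice as fast," this Python sketch computes usable PCIe bandwidth from the per-lane transfer rate and the line encoding of each generation (8b/10b for PCIe 1.0 and 2.0, 128b/130b for PCIe 3.0). It ignores packet and protocol overhead, so real throughput will be lower. The slot assignments are the ones I remember, which is an assumption in itself.

```python
# PCIe generation -> (transfer rate per lane in GT/s, encoding efficiency)
PCIE = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
}


def usable_gbps(gen: int, lanes: int) -> float:
    """Raw link bandwidth in Gb/s after line encoding, before protocol overhead."""
    rate, efficiency = PCIE[gen]
    return rate * lanes * efficiency


links = [
    ("old cards, as the kernel reports (PCIe 1.0 x4)", 1, 4),
    ("old cards, as I assumed (PCIe 2.0 x4)", 2, 4),
    ("new card in server (PCIe 2.0 x8)", 2, 8),
    ("new card in desktop (PCIe 3.0 x4)", 3, 4),
]

for label, gen, lanes in links:
    print(f"{label}: {usable_gbps(gen, lanes):.1f} Gb/s")
```

That works out to 8.0, 16.0, 32.0, and 31.5 Gb/s. Both new links land at roughly double the 16 Gb/s of a 4x PCIe 2.0 link, and at about four times the 8 Gb/s the kernel currently reports.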

I haven’t recently checked the documentation on the PCIe slots I have to use. It is possible that my memory is incorrect. That might be a bummer!

Assuming I was correct, and assuming there’s no other driver or implementation improvement that comes along for the ride with the new cards, I am expecting to see around 14 gigabits per second over NFS.

That’s far short of 40-gigabit or 56-gigabit. It is faster than 10-gigabit Ethernet, and it doesn’t cost more, so I’m happy.

I should also mention that I’m losing about one gigabit per second when connecting to my NAS VM. IPoIB is at layer 3, so I can’t bridge my virtual machines to that interface. I have to put the virtual machines using Infiniband on their own subnet and route to it. This eats up some performance.

Will I notice a doubling in speed?

I will not. This isn’t a necessary upgrade. The old Infiniband blog post is getting old. No one should buy the PCIe 2.0 20-gigabit hardware today.

I can have faster throughput for $40, and I can write a more up-to-date Infiniband blog post, so this seems like a good deal and a good idea.

I usually see around 300 megabytes per second over the NFS connection to my NAS virtual machine. This is just how fast my disks are. My KVM host machine isn’t built to have the fastest disk access, though it is running lvmcache on a pair of mirrored SSDs. The server has four 7200 RPM disks in a RAID 10.

If I read a few gigabytes from my NAS VM, I’ll see around 300 megabytes per second. If I immediately repeat the exact same transfer, it will usually break 700 megabytes per second, because the data is cached in RAM on the server.
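If you want to try the same cold-versus-cached comparison, a rough Python sketch might look like this. The function name and chunk size are arbitrary choices for the example, and the path in the usage comment is a hypothetical placeholder. Keep in mind that your client may cache file data too, so a second read doesn't necessarily cross the network at all.

```python
import time


def read_throughput_mb_s(path: str, chunk_size: int = 1024 * 1024) -> float:
    """Read a file start to finish and return throughput in megabytes per second."""
    total = 0
    start = time.perf_counter()
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            total += len(chunk)
    # Guard against a zero-length interval on very small files
    elapsed = max(time.perf_counter() - start, 1e-9)
    return total / 1e6 / elapsed


# Usage sketch (the path is a placeholder for a large file on your NFS mount):
#   first = read_throughput_mb_s("/mnt/nas/some-big-file")
#   second = read_throughput_mb_s("/mnt/nas/some-big-file")
#   print(f"first read: {first:.0f} MB/s, repeat read: {second:.0f} MB/s")
```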

It will be cool to see that break 1,400 megabytes per second, but that won’t be something I notice in the real world.

Conclusion

What do you think? Is my memory correct? Will the newer PCIe 3.0 card live in a faster slot in my desktop? Will this double my speed? Will things stay the same? Even if my speeds double, will it be worth the effort of opening these computers up? Do you think I’ll see more than two times my current performance?

Let me know in the comments, or stop by the Butter, What?! Discord server to chat with me about it!
